World War I - Chapter 1. The UK Strikes Back

World War Internet is coming. We are on the brink of changes in both legislation and technology that are going to change the way we use the internet, in both our personal and working lives.

Now, when you hear "World War Internet", you may be thinking of hackers from rival countries frantically trying to break into each other's national infrastructure or steal state secrets. In reality, the battles of the internet are being fought between countries and the tech giants that have made the modern internet what it is today.

Nations around the world are desperately trying to claw back control over the internet in their countries. Their reasons vary, but ultimately they need to wrestle back the power and influence that companies like Facebook and Google (amongst others) have over their populations.

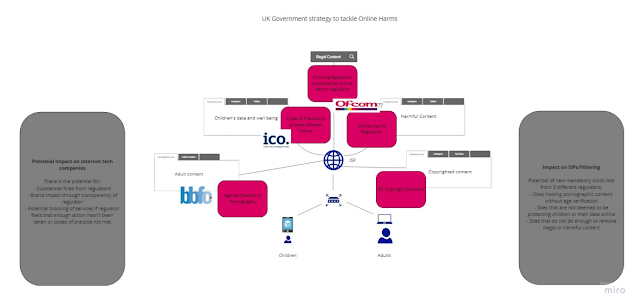

It looks like the UK will fire the first meaningful shot against the tech giants, with a combination of legislation that will endeavour to protect its citizens from Online Harms. Over the past two months we have seen consultations on, or development of, four different pieces of legislation and directives that aim to "make the UK the safest place to be online":

● The Online Harms White Paper, setting out plans for a world-leading package of measures to keep UK users safe online.

● The ICO's consultation on a code of practice to help protect children online.

● Article 13 of the EU's new copyright directive, which holds platform providers to account for copyright infringement on their platforms (putting Brexit aside for now, as the UK government has already suggested that it will enshrine this into UK law).

● The UK government's announcement of the date for its long-awaited age verification for pornographic material.

So, what are the UK government hoping to get out of these developments?

Online Harms White Paper

The Online Harms White Paper sets out the UK government's plans to tackle illegal and harmful content online. Public concern is growing, and every day we see the impact of this content on people around the world, including the most vulnerable children.

The main aim is to tackle content and activity that threatens national security or the physical safety of children. There are already a number of initiatives in place, driven by organisations such as the Internet Watch Foundation and government agencies such as the National Crime Agency (via CEOP) and CTIRU (the Counter Terrorism Internet Referral Unit). However, this activity alone is deemed not enough to reduce the prevalence of illegal and harmful content appearing across social media platforms and hosting providers.

The white paper sets out plans to appoint an independent regulator that will produce codes of practice for technology companies that allow their users to share or discover user-generated content, or to interact with each other online. Whilst the immediate link is with social media companies, the scope also extends to file hosting sites, public discussion forums, messaging services and search engines, covering the large majority of how the public uses the internet today.

It is worth noting that whilst social media companies tend to hit the headlines when their platforms are misused (for the live streaming of atrocities, for example), the recent annual report from the Internet Watch Foundation indicated that a relatively low proportion of illegal child abuse imagery is found on these platforms. It is far more likely to be found on file hosting sites that are beyond the reach of content moderation tools and hosted in countries outside the UK. In fact, less than 2% of this type of content is hosted in the UK. The bigger issue is that the UK is one of the biggest generators of demand for this kind of content. The online harms regulator is unlikely to have any impact on that, which may call for a wider piece of work that stretches beyond the scope of the internet.

Are social media companies unfairly taking all the heat?

Yet despite the numbers suggesting that the big names in social media aren't the main culprits when it comes to illegal content, articles such as this one from the BBC (https://www.bbc.co.uk/news/uk-48236580) highlight that they remain the main focus and are called upon to solve the internet's problems. But is that their place? They should absolutely be accountable for cleaning up their own platforms and reducing the risk of harm to their users. But they can't be held entirely responsible for areas such as indecent imagery of children when the facts show that, for the most part, their platforms are not hosting these images.

Are they being targeted because they are in plain sight, and because their brands are important to them and they will do whatever is necessary to protect their reputations? Just look at how Facebook have spent millions of dollars on a PR campaign to demonstrate that they take privacy seriously. It's far easier to target these organisations and make it look like the issue is being tackled than to regulate those that hide in other countries and do not need a public brand to be successful. It will be interesting to see how the government intends to measure the success of the regulator. Beyond generating headlines about its impact on the technology giants, this may be a job that can never truly be achieved.

But the white paper's plans are not just about illegal content, where, as pointed out earlier, there is already a lot of activity. They are also about harmful content and activity that is not illegal but can have an equally devastating impact on all members of the public.

The government's vision is:

● A free, open and secure internet

● Freedom of expression online

● An online environment where companies take effective steps to keep their users safe, and where criminal, terrorist and hostile foreign state activity is not left to contaminate the online space

● Rules and norms for the internet that discourage harmful behaviour

● The UK as a thriving digital economy, with a prosperous ecosystem of companies developing innovation in online safety

● Citizens who understand the risks of online activity, challenge unacceptable behaviours and know how to access help if they experience harm online, with children receiving extra protection

● A global coalition of countries all taking coordinated steps to keep their citizens safe online

● Renewed public confidence and trust in online companies and services

There will be an emphasis on technology companies doing more themselves to prevent illegal and harmful content or conduct from appearing on their platforms, rather than relying on outside agencies to flag it. There is also an emphasis on giving the public an easy way to call out inappropriate or illegal activity, leading to its fast and efficient removal.

There has been criticism of the plans, suggesting that regulation will only make the current tech giants more powerful, as they are the only companies that can realistically afford to enforce moderation at this scale. But there is a reason why Facebook employ 30,000 moderators worldwide: they have a huge number of users, with content being posted every second. Smaller companies won't have the problem at this scale. Advances in readily available technology can also reduce the need for human intervention, scaling to requirements based on volume.

The government are trying to create an environment where the public does not accept harmful activity online, building a culture of calling it out. At the same time, they recognise that when people do call it out today, technology companies often fail to act on anything but the most extreme or illegal activity.

Some of the most popular social media platforms aren't waiting to be told what to do. Instagram have been trialling new features that give users a gentle warning when they may be about to post something hurtful to others. Whether this type of gentle nudge will significantly change user behaviour remains to be seen, and it will not be enough on its own. But it may make enough of a difference to those whose normal offline behaviour is not intended to harm others, prompting their conscience to think twice about their actions.

If successful, this may well be a global first and potentially a framework that can be replicated around the world. But it is not without its challenges:

● The independent regulator is going to have the difficult role of deciding what is harmful and what is someone exercising their freedom of speech. There is a huge grey area here that will split opinions and raise concerns among organisations such as the Open Rights Group, who are already warning about internet censorship under existing programmes, such as those run by CTIRU.

● There is a question mark over how much these platforms can actually do to prevent harmful content from appearing, particularly in the context of live streaming, where you can't predict what a user is going to broadcast. We are not yet in the realms of technology enabling a Minority Report approach to crime. Therefore, platforms will always be reacting and playing whack-a-mole with this kind of content as it is broadcast and shared across the world in seconds.

Who will the regulator be?

It is yet to be determined, but OFCOM appear to be hiring people with skills suited to this kind of regulation, so I wouldn't be surprised if Online Harms came under their remit.

What power will the new regulator have?

The new regulator will have the power to issue fines to these platforms and hold their board members legally accountable if they are not thought to be taking sufficient steps to reduce Online Harms on their platforms.

Whilst these companies are used to the threat of fines, particularly in the context of data protection, these changes could see fines coming at them from various regulators, and the cumulative financial impact could be huge.

The regulator will also have the power to demand that UK ISPs block access to certain platforms. This would be a pretty bold move against the tech giants. I would imagine it would only be enforced in extreme circumstances, where platforms have been persistently warned about the content they host and have failed to deal with it.

Whilst the UK government are the first to try to tackle this in a meaningful way, they are of course not the first government to want control over the content that their citizens can access. The infamous Great Firewall of China has been doing this for years. This is why privacy campaigners have opposed some of the proposed legislation: they fear that Big Brother (aka the UK government) is going to restrict free speech and censor the internet for its own objectives, only allowing internet users in the UK to see what it wants them to see. This isn't the first time that the UK government has been questioned over its approach to online privacy. The European Court of Human Rights declared the UK government's surveillance regime a violation of the rights to privacy and freedom of expression.

There have also been more recent cases where governments have taken a tough stance. A great example is the Indian government, which recently banned TikTok from being downloaded from Google Play and the Apple App Store over concerns that the platform hosted child exploitation content and pornography. Whilst this has been challenged by the Chinese company that owns the platform, it demonstrates how some governments will take a hard line to protect their citizens and the reputation of their country.

Whilst the UK government is taking a slightly softer approach, the warning in the white paper is clear: the proposed regulator for online content will have the power to demand that all UK ISPs block any platform that is not conforming.

The recent terrorist attacks in Sri Lanka also prompted the Sri Lankan government to effectively "turn off" Facebook across the country. The aim was to prevent the sharing of videos of the attacks and to try to prevent further reprisals against Muslim communities in the country. Whilst this kind of action might be considered a minor inconvenience in the UK, in Sri Lanka Facebook is the main channel through which internet users communicate and get news. For some, Facebook is the internet, so turning it off is like turning off the internet.

So, can a balance be struck between protecting internet users in the UK from online harm and addressing concerns about privacy and censorship?

Whilst there are concerns from the privacy groups already mentioned, a line must be drawn somewhere. It is clear that a laissez-faire attitude to the tech giants has had an enormous impact on democracy, mental health and access to illegal or harmful content. For all the concerns about the social media giants, it is also clear that they have no desire for their platforms to play a part in any negative activity in these areas. But as commercial organisations, they will always face the challenge of maintaining their profit margins whilst keeping their users safe from online harms. This is particularly true in a world where every country has a different view on what is acceptable and even on what is illegal.

Social media hits the headlines for the wrong reasons with every atrocity that occurs globally, from the US to New Zealand to Sri Lanka. It is clear that governments around the world do not trust the platforms to control the content they host, or to stop those who wish to do wrong from using them, and with good reason. We are yet to see any of them demonstrate how they intend to do this beyond manual reporting and review. That review is aided by relatively simple algorithms, which are in no shape to deal with the volume of content and the increasingly blurred lines between what is illegal and what is harmful.

The experimentation by Instagram could be an indicator of how these platforms may need to adapt to encourage their users to be more responsible. This obviously will not be a silver bullet, though. One of the reasons people behave differently, and potentially more hurtfully, online is that they benefit from anonymity. As long as they can hide behind that anonymity, they will continue to behave in ways they would not offline.

A bold move by any regulator would be to make online anonymity obsolete. Could the new age verification for pornographic content be a precursor to identity verification for any online service? There would be many objections from data privacy groups and concerns over a "big brother" approach from the government. But it would certainly have a massive positive impact on user behaviour on these platforms (not to mention reducing the number of accounts boasted by the platforms).

In the next chapter I'll look at the ICO's new code of practice for age appropriate design.
